In fact, my laptop has fifty cores divided between its CPU and its two GPUs. Keep in mind that my laptop was made in 2009, so more recent computers may have even more, especially desktops and computers built for gaming.

With all these cores, there is the potential for massive performance boosts in the software we use every day.

How Do You Compare Performance?

Performance can be measured in FLOPS (floating point operations per second), a metric commonly used in high performance computing. A floating point number is a number with a fractional part, stored in binary form. Scientific and engineering simulations use floating point numbers heavily. FLOPS is a very broad metric describing how many floating point operations, such as adding, multiplying, or comparing two numbers, a device can perform in one second.
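To make the metric concrete, here is a minimal sketch of how an operation count and a runtime turn into a FLOPS figure. It assumes the textbook way of multiplying two n x n matrices, where every output entry costs n multiplications and n - 1 additions. The function names and the half-second runtime are made up for illustration.

```python
def matmul_flops(n: int) -> int:
    """Floating point operations in a textbook n x n matrix product.

    Each of the n * n output entries needs n multiplications and n - 1 additions.
    """
    return n * n * (2 * n - 1)


def gigaflops(operations: int, seconds: float) -> float:
    """Convert an operation count and a runtime in seconds into GigaFLOPS."""
    return operations / seconds / 1e9


if __name__ == "__main__":
    ops = matmul_flops(1024)
    # Hypothetical runtime of half a second, chosen only for illustration.
    print(f"{ops} operations, {gigaflops(ops, 0.5):.2f} GFLOPS")
```

Dividing the same operation count by a shorter runtime gives a proportionally higher figure, which is all a FLOPS comparison between two devices really says.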

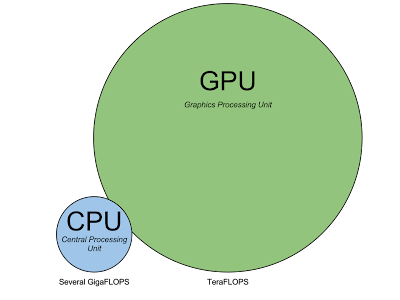

Relative compute performance in relation to size

Because they have so many more cores, GPUs can perform more FLOPS than CPUs. In many cases, a good GPU is an order of magnitude faster than a good CPU. That means that while a good CPU might manage a few dozen to about a hundred GigaFLOPS, a good GPU could, theoretically, handle TeraFLOPS of compute workloads.

Practical Test

The best GPUs have thousands of cores in them. The Radeon HD 7970 has 2048 programmable cores. In contrast, my laptop's best GPU, the GeForce 9600M GT, has just 32 cores. Even so, it's plenty to show off the power of GPUs.

For a test, I used OpenCL, a parallel programming framework that can be used to program CPUs and GPUs alike. I wrote an OpenCL program to compute matrix dot products between matrices of varying sizes. Computing matrix dot products is a good way to test performance because it requires many computations. Furthermore, matrix dot products are used in a lot of scientific and graphics calculations. To summarize: in the test, I give each device on my computer a giant workload to see how long it takes to finish.
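To show the shape of the workload, here is a minimal single-threaded sketch in plain Python. It is not the OpenCL source from the test, and the function names are hypothetical. The point is that each output entry is its own small dot product, which is the kind of piece a GPU version would typically hand to a separate work item.

```python
def output_entry(a, b, row, col):
    """Compute one entry of the product: row `row` of a dotted with column `col` of b."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def naive_matmul(a, b):
    """Single-threaded version: visit every output entry one after another."""
    rows, cols = len(a), len(b[0])
    return [[output_entry(a, b, r, c) for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(naive_matmul(a, b))
```

No call to `output_entry` reads a value written by another call, so the entries can be computed in any order or all at once.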

You can download the source code I made for the test. It is free software, so you can use it in your own projects.

Results

I ran the test on each of the three OpenCL devices in my laptop, computing the dot product between matrices ranging from 16x16 to 1024x1024 in size, to compare the runtime of each device.

The red plot is my control: a naive, single-threaded implementation of a matrix dot product solver. Unsurprisingly, it took the most time. The violet plot is the amount of time it took both cores of my CPU to compute the different sized matrices using my OpenCL code. This was much faster, taking less than half the time. The other two lines, if you can see them, are squished along the x-axis. Both of my GPUs took almost no time to compute the matrix dot product. To illustrate this more clearly, here are the last three lines of the output data.

Runtimes of computing dot products between nxn matrices on different OpenCL devices

| n | Single Threaded | GeForce 9600M GT | GeForce 9400M | Core 2 Duo T9600 |
|---|---|---|---|---|
| 992 | 11416.339 ms | 22.507 ms | 29.262 ms | 4256.869 ms |
| 1008 | 12232.509 ms | 23.188 ms | 30.678 ms | 4754.069 ms |
| 1024 | 12251.256 ms | 24.979 ms | 30.846 ms | 4464.266 ms |

As you can see, while both cores of the CPU combined took about 4.5 seconds to compute a 1024x1024 matrix, a GPU could do it in about 30 milliseconds.

In other words, what takes a CPU several seconds, a GPU can do in the blink of an eye.
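As a quick sanity check on those numbers, the snippet below divides the single-threaded runtime by each device's runtime for n = 1024. The values are copied from the table above. The `speedup` helper is just an illustrative name.

```python
# Runtimes in milliseconds for n = 1024, copied from the results table.
runtimes_ms = {
    "Single Threaded": 12251.256,
    "GeForce 9600M GT": 24.979,
    "GeForce 9400M": 30.846,
    "Core 2 Duo T9600": 4464.266,
}


def speedup(baseline_ms: float, device_ms: float) -> float:
    """How many times faster a device is than the baseline."""
    return baseline_ms / device_ms


if __name__ == "__main__":
    baseline = runtimes_ms["Single Threaded"]
    for device, ms in runtimes_ms.items():
        print(f"{device:>18}: {speedup(baseline, ms):7.1f}x the speed of the control")
```

The two GPUs come out hundreds of times faster than the single-threaded control, while the dual-core CPU running the OpenCL code is a little under three times faster.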

Limitations

If GPUs are so fast, why haven't they replaced CPUs? The answer is that they're not fast all the time. A matrix dot product is an ideal problem for a GPU because it's a fine grained parallel problem: a problem that can be divided into many small, identical pieces. Such a problem can be easily divided across many cores. Not all problems are like that, though. Many problems have data dependencies between pieces, need their pieces solved one at a time, or can't be broken down at all. A GPU handles problems like that poorly, but CPUs are exceedingly good at solving them.
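The difference can be sketched with two small functions. Both are hypothetical examples picked for illustration, not part of the test program. In the first, every output depends only on its own input, so the pieces can be processed in any order. In the second, a Newton iteration for a square root, each step needs the result of the step before it.

```python
def square_in_any_order(values, order):
    """Fine grained parallel: each output depends only on its own input,
    so the indices can be visited in any order (or all at once)."""
    out = [None] * len(values)
    for i in order:
        out[i] = values[i] * values[i]
    return out


def newton_sqrt(s, steps):
    """Data dependency: every step needs the estimate from the previous step,
    so the steps have to run one at a time."""
    x = s
    for _ in range(steps):
        x = (x + s / x) / 2
    return x


if __name__ == "__main__":
    print(square_in_any_order([1, 2, 3, 4], [3, 1, 0, 2]))
    print(newton_sqrt(2.0, 20))
```

Spreading the first function across thousands of cores is straightforward. Extra cores do nothing for a single run of the second, because step k cannot start until step k - 1 has finished.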